Abbasi, Ali; Nooralinejad, Parsa; Pirsiavash, Hamed; Kolouri, Soheil. “BrainWash: A Poisoning Attack to Forget in Continual Learning.” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2024, pp. 24057-24067, https://doi.org/10.1109/CVPR52733.2024.02271.

Continual learning, a subfield of deep learning, has become increasingly important because it addresses the challenge of learning new tasks over time without forgetting previous ones. However, a largely overlooked issue is how vulnerable continual learning models are to attacks that intentionally make them forget what they have learned. In this paper, we introduce a new attack, "BrainWash," which poisons the data a continual learner trains on so that it forgets previously learned tasks. By adding BrainWash noise to the training data of a variety of existing continual learners, we show that a trained continual learning model can be made to forget its past tasks dramatically, even when it uses methods designed to prevent such forgetting. Notably, the attacker does not need access to data from past tasks; it needs only the model's current parameters and the data from the most recent task. Our extensive experiments demonstrate the effectiveness of BrainWash, showing that it significantly degrades the performance of continual learning methods based on both regularization and memory replay. Our code is available online: https://github.com/mint-vu/Brainwash
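The abstract judges the attack by how much a learner forgets its earlier tasks. To make "forgetting" concrete, here is a minimal sketch of one commonly used average-forgetting measure; it is an illustration, not the paper's evaluation code. The function name `average_forgetting` and the accuracy values in the usage example are hypothetical. It assumes an accuracy matrix where `acc[i][j]` is the accuracy on task `j` measured right after training on task `i`.

```python
from typing import Sequence


def average_forgetting(acc: Sequence[Sequence[float]]) -> float:
    """Average forgetting after the final task (illustrative sketch).

    acc[i][j] is the accuracy on task j measured right after training on
    task i; only entries with j <= i are read, so rows may be ragged.
    Forgetting of task j is the best accuracy it reached before the final
    task minus its accuracy after the final task.
    """
    num_tasks = len(acc)
    if num_tasks < 2:
        return 0.0  # nothing can be forgotten with a single task
    final = acc[num_tasks - 1]
    drops = []
    for j in range(num_tasks - 1):  # every task except the last one
        best_before = max(acc[i][j] for i in range(j, num_tasks - 1))
        drops.append(best_before - final[j])
    return sum(drops) / len(drops)


# Hypothetical accuracies for three tasks, clean vs. poisoned final task.
clean = [[0.90], [0.88, 0.85], [0.86, 0.83, 0.87]]
poisoned = [[0.90], [0.88, 0.85], [0.40, 0.35, 0.86]]
print(round(average_forgetting(clean), 3))     # about 0.03
print(round(average_forgetting(poisoned), 3))  # about 0.5
```

Under this measure, a successful poisoning attack shows up as a large increase in average forgetting once the poisoned task has been learned, even if accuracy on that task itself stays high.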

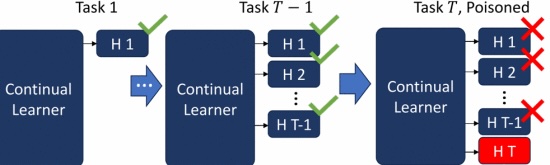

Figure 1. BrainWash is a poisoning attack targeting continual learning systems. It sabotages a task so that learning it increases the system's forgetting of previously learned tasks.