Li, J., Chen, J., Tang, Y., Wang, C., Landman, B. A., & Zhou, S. K. (2023). Transforming medical imaging with Transformers? A comparative review of key properties, current progresses, and future perspectives. Medical Image Analysis, 85, 102762. doi: 10.1016/j.media.2023.102762

The Transformer, a recent advance in deep learning, is now widely used in natural language processing and computer vision. Since medical imaging shares many problems with computer vision, it’s natural to ask whether Transformer models can improve medical imaging as well. In this paper, we explore this question. We start by explaining the basics of Transformer models, comparing them with convolutional neural networks (CNNs), and highlighting the key properties that characterize Transformers. Then, we provide a detailed review of how Transformer-based methods are being used in medical imaging, covering image segmentation, recognition, detection, registration, reconstruction, and enhancement. What makes our review unique is that it is organized around the key properties of Transformers (derived mainly by comparing them to CNNs) and around architecture types, which specify how Transformers and CNNs are combined. This organization helps readers understand the reasoning behind each approach.

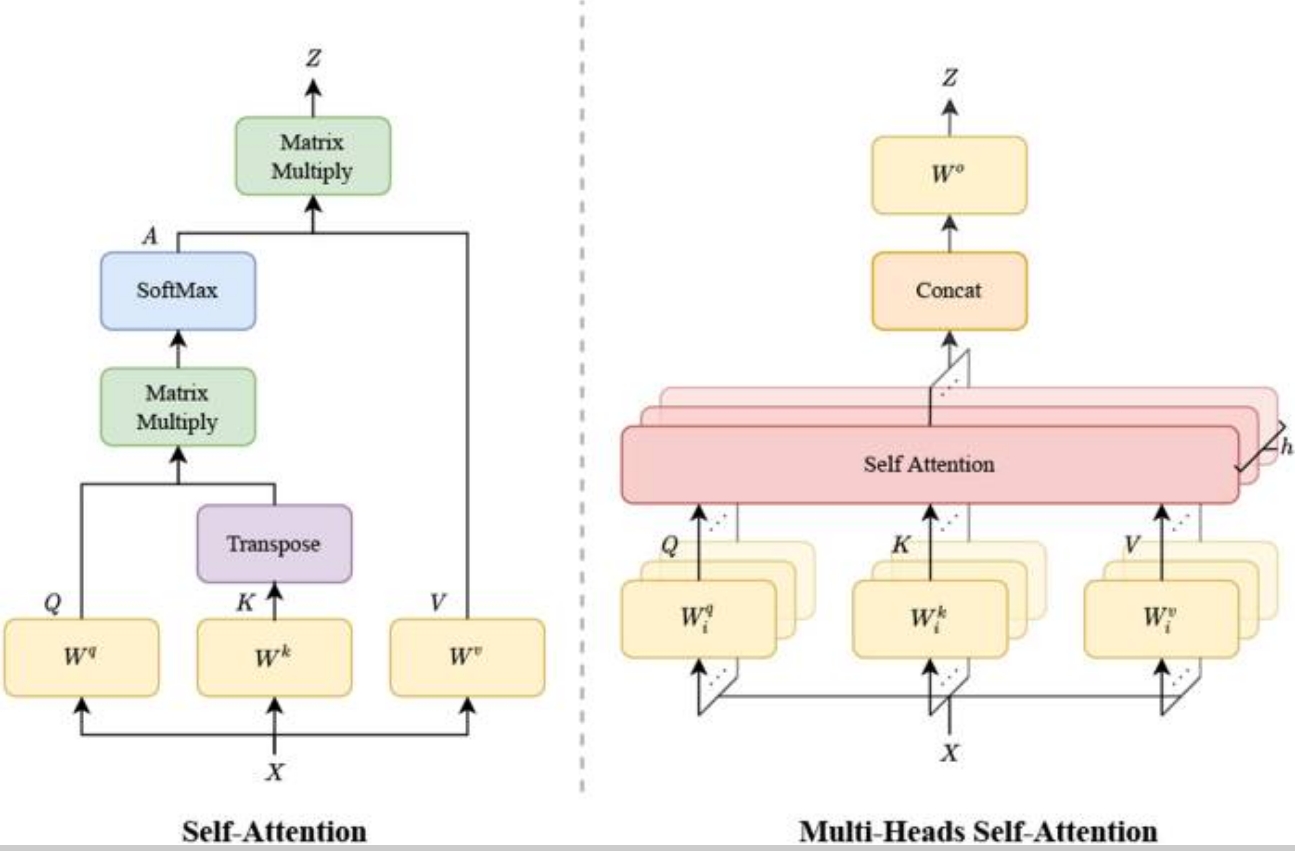

Fig. 1

Details of the self-attention mechanism (left) and multi-head self-attention (MSA) (right). Unlike a single self-attention module, MSA runs several attention modules in parallel. Their independent attention features are then concatenated and linearly transformed to produce the output.
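
The mechanism described in the caption can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: it assumes the standard scaled dot-product form of attention, bias-free projections, and randomly initialized weights, and the names `self_attention`, `multi_head_self_attention`, `w_q`, `w_k`, `w_v`, and `w_o` are hypothetical, chosen only for this example.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(q, k, v):
    # Scaled dot-product attention (assumed form): softmax(Q K^T / sqrt(d_k)) V.
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    # x: (n_tokens, d_model); all weight matrices: (d_model, d_model).
    n_tokens, d_model = x.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(t):
        # (n_tokens, d_model) -> (num_heads, n_tokens, d_head)
        return t.reshape(n_tokens, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)

    # Each head attends independently; the batch axis runs them in parallel.
    heads, weights = self_attention(q, k, v)

    # Concatenate the per-head features, then apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(n_tokens, d_model)
    return concat @ w_o, weights


# Toy input: 4 tokens (e.g. image patches) with 8 features, 2 heads.
rng = np.random.default_rng(0)
n_tokens, d_model, num_heads = 4, 8, 2
x = rng.normal(size=(n_tokens, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape)   # output has the same shape as the input
print(attn.shape)  # one (n_tokens x n_tokens) attention map per head
```

The output keeps the input shape, while `attn` holds one attention map per head; each row of a map sums to 1 because of the softmax. In a trained model the projection matrices would be learned rather than sampled at random.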