Maier-Hein, L., Reinke, A., Godau, P., et al. (2024). Metrics reloaded: recommendations for image analysis validation. Nature Methods, 21(2), 195-212. doi: 10.1038/s41592-023-02151-z

There is growing evidence that flaws in the validation of machine learning (ML) algorithms are a global problem that is often overlooked. In biomedical image analysis, the performance metrics used to evaluate these algorithms sometimes do not reflect the domain interest, which makes it harder to measure scientific progress and to translate ML techniques into practice. To address this, we developed Metrics Reloaded, a comprehensive framework that guides researchers in selecting metrics suited to their problem. Developed by a large international team in a multistage process, the framework introduces the concept of a problem fingerprint: a structured representation of the given problem that captures all aspects relevant for metric selection, from the domain interest to the properties of the dataset and of the algorithm output. Based on this fingerprint, users are guided through the selection and application of appropriate validation metrics and are made aware of common pitfalls. Metrics Reloaded targets image analysis problems that can be framed as classification at the image, object or pixel level: image-level classification, object detection, semantic segmentation and instance segmentation. To make the framework easy to use, we implemented it in an online tool. By improving validation practice across application domains, Metrics Reloaded promotes a more standardized approach to algorithm validation. We demonstrate its usefulness on a range of biomedical use cases.

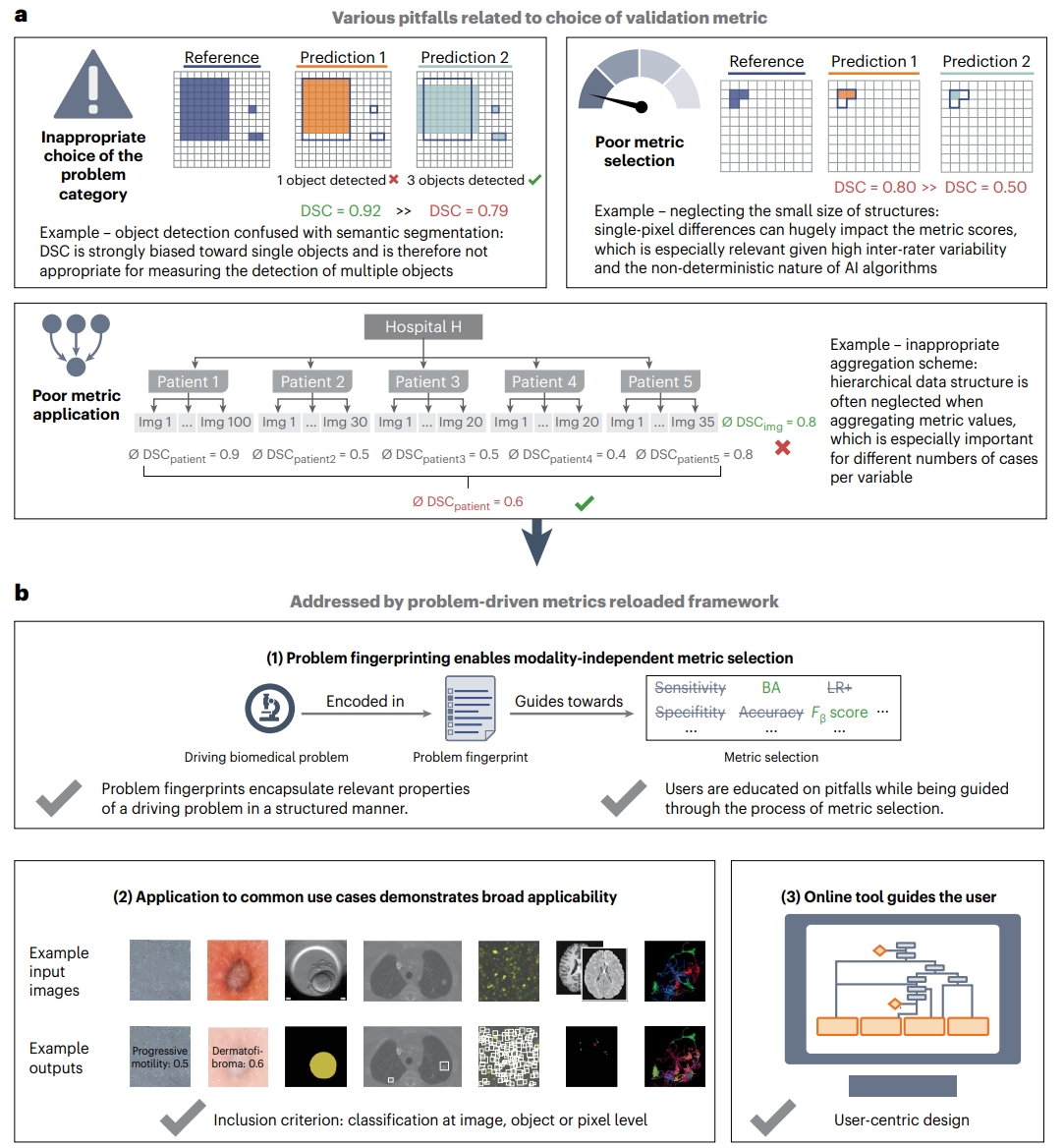

Fig. 1 | Contributions of the Metrics Reloaded framework. a, Motivation: Common problems related to metrics typically arise from inappropriate choice of the problem category (here: object detection (ObD) confused with semantic segmentation (SemS); top left), poor metric selection (here: neglecting the small size of structures; top right) and poor metric application (here: inappropriate aggregation scheme; bottom). Pitfalls are highlighted in the boxes; ∅ refers to the average Dice similarity coefficient (DSC) values. Metric values shown in green are good, whereas values shown in red are poor. Green check marks indicate desirable behavior of metrics; red crosses indicate undesirable behavior. Adapted from ref. 27 under a Creative Commons license CC BY 4.0. b, Metrics Reloaded addresses these pitfalls. (1) To enable the selection of metrics that match the domain interest, the framework is based on the new concept of problem fingerprinting, that is, the generation of a structured representation of the given biomedical problem that captures all properties that are relevant for metric selection. Based on the problem fingerprint, Metrics Reloaded guides the user through the process of metric selection and application while raising awareness of relevant pitfalls. (2) An instantiation of the framework for common biomedical use cases demonstrates its broad applicability. (3) A publicly available online tool facilitates application of the framework. Second input image reproduced from dermoscopedia (ref. 58) under a Creative Commons license CC BY 4.0; fourth input image reproduced with permission from ref. 59, American Association of Physicists in Medicine.
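
Two of the pitfalls named in Fig. 1a, neglecting the small size of structures and the choice of aggregation scheme, can be illustrated numerically. The following is a minimal sketch in plain Python, not code from the Metrics Reloaded tool: it computes the Dice similarity coefficient (DSC) on toy square masks, and the helper names `square` and `pooled_dice`, the mask sizes and the two aggregation schemes are assumptions chosen for illustration only.

```python
def dice(pred, ref):
    """Dice similarity coefficient (DSC) between two sets of pixel coordinates."""
    pred, ref = set(pred), set(ref)
    if not pred and not ref:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * len(pred & ref) / (len(pred) + len(ref))


def square(x0, y0, side):
    """Hypothetical helper: pixel coordinates of an axis-aligned square mask."""
    return {(x, y) for x in range(x0, x0 + side) for y in range(y0, y0 + side)}


def pooled_dice(pairs):
    """DSC computed once over the pixels of all (pred, ref) pairs pooled together."""
    overlap = sum(len(p & r) for p, r in pairs)
    total = sum(len(p) + len(r) for p, r in pairs)
    return 2 * overlap / total


# The same one-pixel shift applied to a small and to a large structure.
small = (square(1, 0, 2), square(0, 0, 2))     # (prediction, reference), 2x2 pixels
large = (square(1, 0, 20), square(0, 0, 20))   # (prediction, reference), 20x20 pixels

dsc_small = dice(*small)   # 2 * 2 / (4 + 4)
dsc_large = dice(*large)   # 2 * 380 / (400 + 400)

# Two aggregation schemes over the same two cases.
mean_dsc = (dsc_small + dsc_large) / 2
pooled_dsc = pooled_dice([small, large])

print(f"small: {dsc_small:.3f}, large: {dsc_large:.3f}")
print(f"per-case mean: {mean_dsc:.3f}, pooled: {pooled_dsc:.3f}")
```

In this toy setting the identical one-pixel shift yields a DSC of 0.500 for the small structure and 0.950 for the large one, and the two aggregation schemes report 0.725 (per-case mean) versus roughly 0.946 (pooled). Which behavior is appropriate depends on the domain interest, which is the kind of information the problem fingerprint is meant to capture.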