Cui, C., Yang, H., Wang, Y., Zhao, S., Asad, Z., Coburn, L. A., Wilson, K. T., Landman, B. A., & Huo, Y. (2023). Deep multimodal fusion of image and non-image data in disease diagnosis and prognosis: a review. Progress in Biomedical Engineering, 5(2), 022001. doi: 10.1088/2516-1091/acc2fe

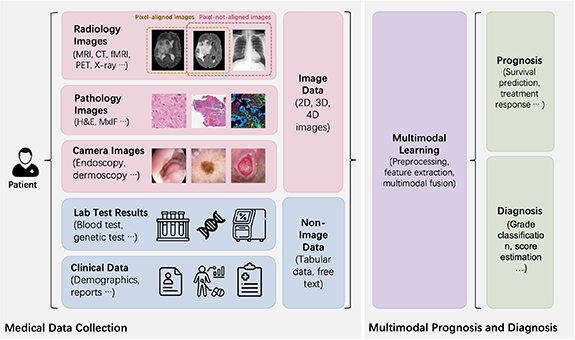

The rapid growth of diagnostic technologies in healthcare is increasing the need for doctors to manage and integrate the different types of data generated during routine medical practice. For example, when diagnosing a cancer patient and planning treatment, doctors draw on various images (such as radiology, pathology, and camera images) and non-image data (such as clinical and genetic data). However, decisions based on this information can be subjective and can vary greatly between doctors. With recent progress in deep learning, many efforts now focus on combining and processing all of this information to support more objective, data-driven decisions in healthcare. This paper reviews recent research that addresses this challenge. It covers current workflows for combining different types of data, methods for fusing these data sources, how well these methods perform, their applications in disease diagnosis and prognosis, and the challenges and future directions in this field.

Figure 1. Scope of this review. Multimodal data comprising image data (e.g. radiology and pathology images) and non-image data (e.g. genomic and clinical data) are fused through multimodal learning methods for the diagnosis and prognosis of diseases.