Liu, J., Zhang, Y., Chen, J.-N., Xiao, J., Lu, Y., Landman, B. A., Yuan, Y., Yuille, A., Tang, Y., & Zhou, Z. (2023). CLIP-driven universal model for organ segmentation and tumor detection. In Proceedings of the IEEE International Conference on Computer Vision (pp. 21095–21107). doi: 10.1109/ICCV51070.2023.01934

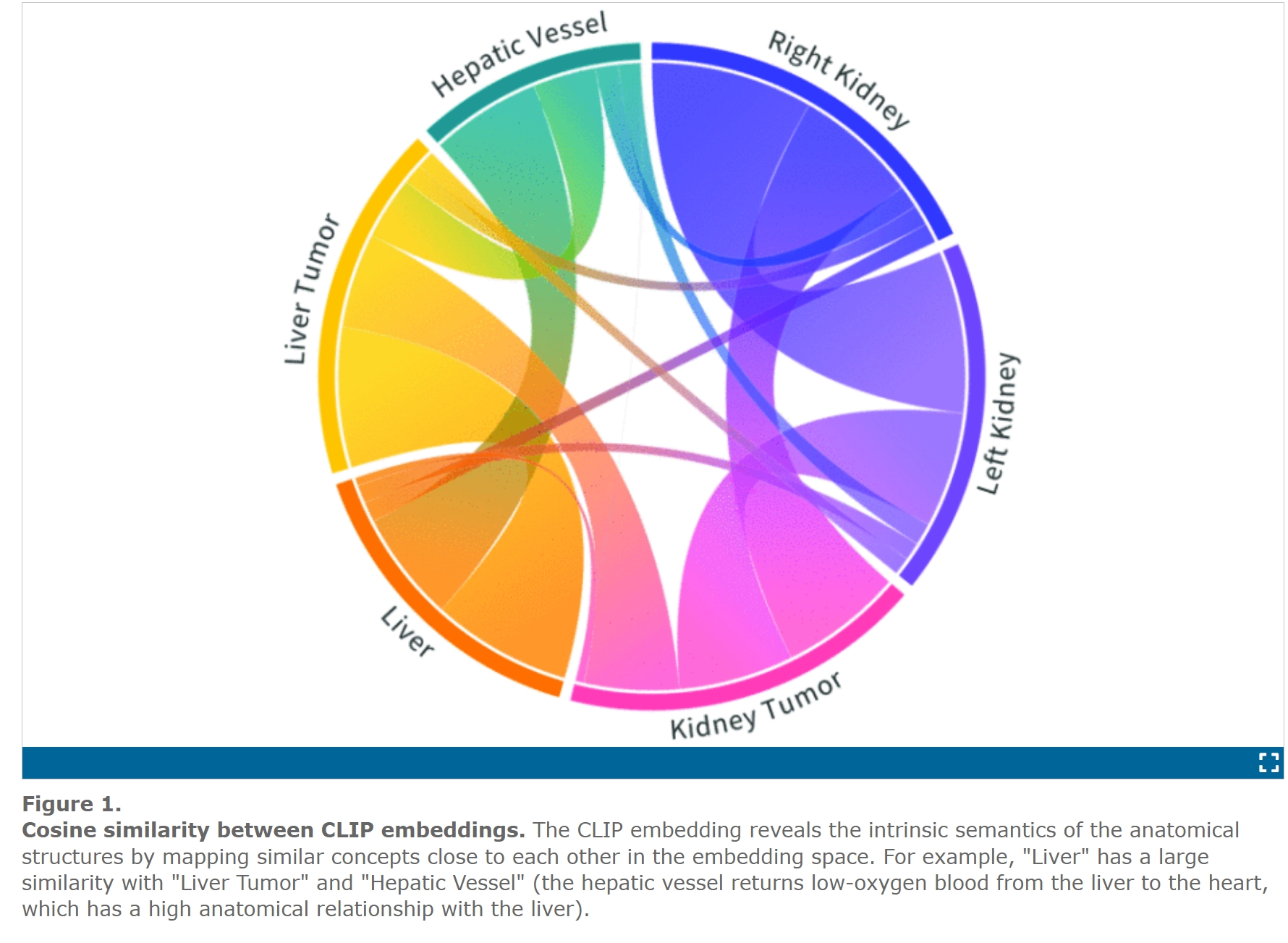

An increasing number of public datasets have markedly advanced automated organ segmentation and tumor detection. However, each dataset is small and only partially labeled, and most focus on a narrow set of organs or tumors. As a result, the models trained on them are often limited to specific organs or tumors and ignore the semantics of anatomical structures. To address this, we introduce the CLIP-Driven Universal Model, which incorporates text embeddings learned by Contrastive Language-Image Pre-training (CLIP) into segmentation models. This CLIP-based label encoding captures the relationships between anatomical structures, enabling the model to segment 25 organs and 6 types of tumors. The model was trained on 3,410 CT scans assembled from 14 datasets and evaluated on 6,162 external CT scans from 3 additional datasets. Our model ranked first on the Medical Segmentation Decathlon (MSD) public leaderboard and achieved state-of-the-art results on Beyond The Cranial Vault (BTCV). Additionally, the Universal Model is 6 times faster than dataset-specific models, generalizes better to CT scans from different sites, and transfers better to novel tasks.
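To make the idea of text-driven, per-class segmentation more concrete, here is a minimal plain-Python sketch under stated assumptions; it is not the authors' implementation. The toy 2-d vectors stand in for CLIP text embeddings of class names (real ones are far larger), `controller` is a hypothetical linear map from the concatenated text embedding and a pooled image feature to the weights of a per-class 1x1x1 head, and `masked_bce` illustrates one common way to train on partially labeled datasets: only the classes a scan's dataset actually annotates contribute to the loss.

```python
import math
from typing import Dict, List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def controller(text_emb: Vector, image_feat: Vector, weight: List[Vector]) -> Vector:
    """Hypothetical linear controller: maps [text_emb ; image_feat] to the
    weights of one per-class 1x1x1 head (one weight per voxel-feature channel)."""
    z = text_emb + image_feat  # list concatenation
    return [dot(row, z) for row in weight]


def class_probabilities(
    voxel_feats: List[Vector],
    text_embs: Dict[str, Vector],
    image_feat: Vector,
    weight: List[Vector],
) -> Dict[str, List[float]]:
    """Independent sigmoid probability for every class at every voxel."""
    probs: Dict[str, List[float]] = {}
    for name, emb in text_embs.items():
        head = controller(emb, image_feat, weight)
        probs[name] = [sigmoid(dot(head, f)) for f in voxel_feats]
    return probs


def masked_bce(
    probs: Dict[str, List[float]],
    labels: Dict[str, List[int]],
    eps: float = 1e-7,
) -> float:
    """Mean binary cross-entropy over annotated classes only; classes absent
    from `labels` (not annotated in this scan's dataset) contribute nothing."""
    total, count = 0.0, 0
    for name, target in labels.items():
        for p, y in zip(probs[name], target):
            p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
            total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
            count += 1
    return total / count if count else 0.0


# Toy usage: every value below is hypothetical.
text_embs = {
    "liver": [1.0, 0.0],
    "liver tumor": [0.9, 0.3],
    "spleen": [0.0, 1.0],
}
image_feat = [0.5, -0.5]                  # stand-in for a pooled image feature
weight = [[1.0, 0.0, 0.0, 0.0],           # 3 voxel channels x 4 controller inputs
          [0.0, 1.0, 0.0, 0.0],
          [0.0, 0.0, 1.0, 0.0]]
voxel_feats = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # two voxels, 3 channels each

probs = class_probabilities(voxel_feats, text_embs, image_feat, weight)
# A scan from a dataset that annotates only the liver: tumor and spleen are ignored.
loss = masked_bce(probs, {"liver": [1, 0]})
print(round(loss, 4))
```

Because each class gets its own sigmoid output rather than competing in a shared softmax, a voxel can belong to both "liver" and "liver tumor", and supporting an additional class in this sketch only requires supplying another embedding.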