Collins, Benjamin X.; Belisle-Pipon, Jean-Christophe; Evans, Barbara J.; Ferryman, Kadija; Jiang, Xiaoqian; Nebeker, Camille; Novak, Laurie; Roberts, Kirk; Were, Martin; Yin, Zhijun; Ravitsky, Vardit; Coco, Joseph; Hendricks-Sturrup, Rachele; Williams, Ishan; Clayton, Ellen W.; Malin, Bradley A. “Addressing ethical issues in healthcare artificial intelligence using a lifecycle-informed process”. JAMIA Open, vol. 7, no. 4, 2024, p. ooae108. DOI: 10.1093/jamiaopen/ooae108.

Objectives: Artificial intelligence (AI) develops through a process of testing, use, and improvement that can be viewed as a lifecycle. Different stakeholders may hold varying views on the ethical issues raised by this fast-developing technology and may therefore overlook potential problems. Identifying ethical issues at each stage of the AI lifecycle can improve decision-making and help avoid negative outcomes.

Materials and methods: We reviewed AI lifecycles described in the healthcare literature to find common ethical themes. We used these themes to build a more comprehensive lifecycle that covers a wider range of ethical concerns. We then examined the potential benefits and risks of AI at each stage of the lifecycle to identify ethical questions and possible conflicts. Three case studies illustrate how ethical issues can emerge at different stages.

Results, discussion, and conclusion: The case studies show that analyzing AI with a lifecycle approach maps its effects at each stage, making it easier to weigh benefits against harms. This approach is useful to a variety of stakeholders and can improve communication about ethical concerns among patients, healthcare providers, researchers, and others.

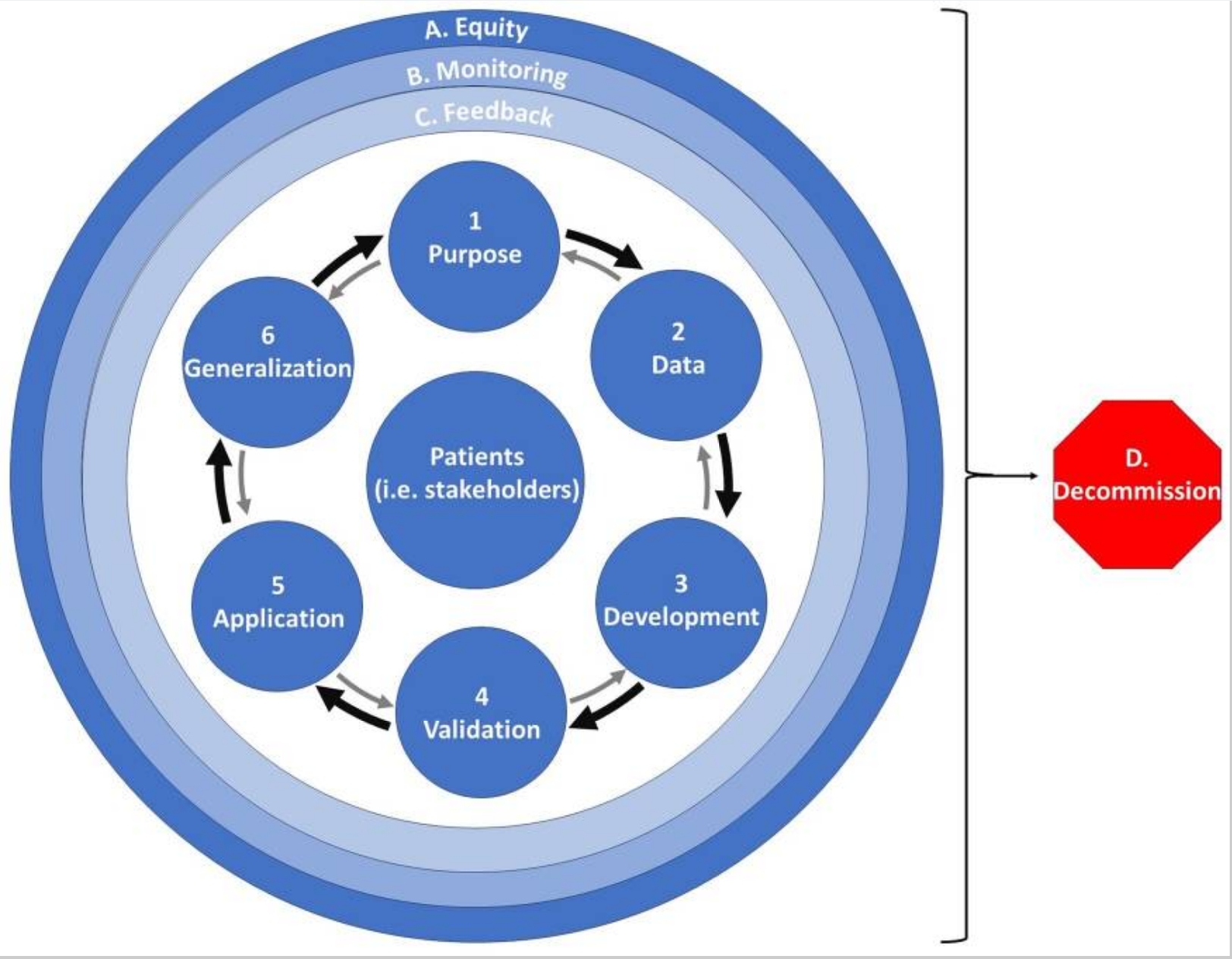

Fig. 1. Healthcare AI ethics lifecycle, with patients as the central stakeholders in an example use case.