Rao, M.; Qin, Y.; Kolouri, S.; Wu, J.Y.; Moyer, D. “Zero-shot prompt-based video encoder for surgical gesture recognition.” International Journal of Computer Assisted Radiology and Surgery, 2024, DOI: 10.1007/s11548-024-03257-1.

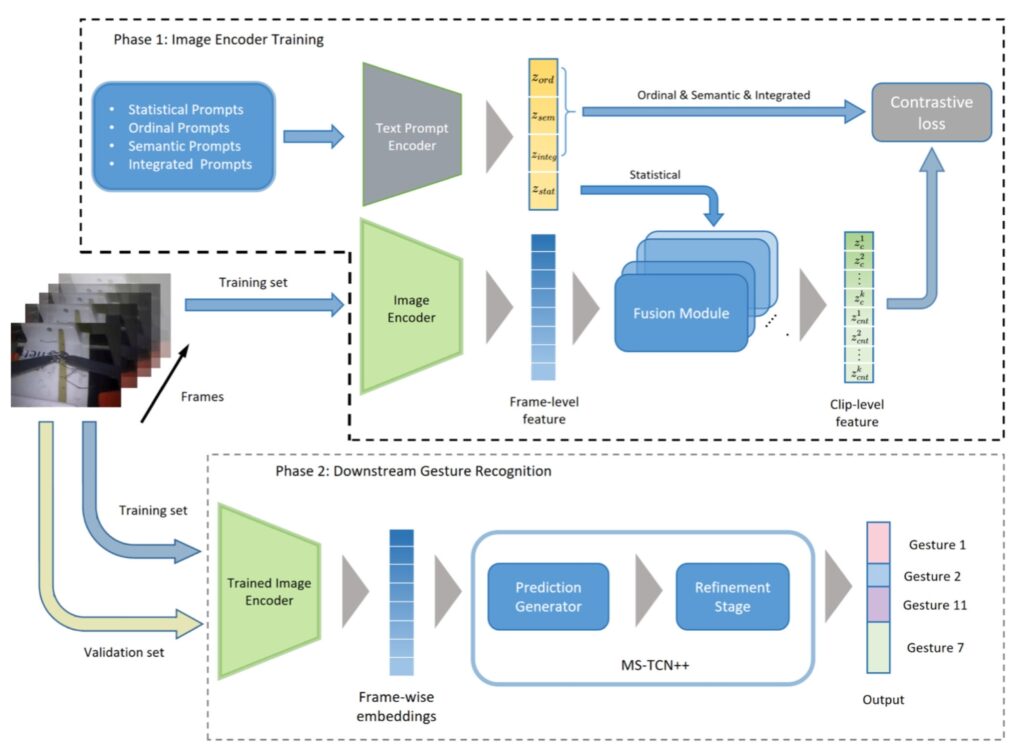

This study explores how to recognize surgical gestures without collecting a large labeled dataset for every possible gesture. The goal is a model that can identify gestures it has never seen during training, a setting known as zero-shot recognition. The researchers took CLIP, a pre-trained model that relates images to text, and adapted it to recognize surgical gestures in video. In their experiments this method outperformed traditional models, especially on gestures the system had not been trained on. They also found that including text descriptions during training improved the model’s performance. The approach, called “Bridge-Prompt,” shows promise for surgical robotics because it can recognize a variety of gestures without being retrained for each new one.
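The zero-shot idea described above can be illustrated with a minimal sketch. It assumes that a video clip and each gesture description have already been mapped into a shared embedding space, as a CLIP-style model would do. The vectors, the gesture descriptions, and the function names `cosine_similarity` and `zero_shot_classify` are hypothetical stand-ins for illustration, not the authors' implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(video_embedding, text_embeddings):
    """Pick the gesture description whose embedding best matches the video.

    No gesture-specific training happens here: a new gesture is added
    simply by adding its text embedding to the dictionary.
    """
    scores = {
        label: cosine_similarity(video_embedding, embedding)
        for label, embedding in text_embeddings.items()
    }
    best_label = max(scores, key=scores.get)
    return best_label, scores


# Hypothetical 3-dimensional embeddings standing in for text-encoder outputs.
text_embeddings = {
    "reaching for the needle": [0.9, 0.1, 0.0],
    "pushing the needle through tissue": [0.1, 0.9, 0.1],
    "pulling the suture": [0.0, 0.2, 0.9],
}

# Hypothetical embedding standing in for the video encoder's output on one clip.
video_embedding = [0.2, 0.8, 0.2]

predicted, scores = zero_shot_classify(video_embedding, text_embeddings)
print(predicted)
```

In a real system the embeddings would come from the trained video and text encoders and would have hundreds of dimensions. The matching step stays the same, which is why supporting a new gesture needs only a new text description rather than retraining.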

Fig. 1. Wasserstein Task Embedding (WTE) framework. Given two labeled task distributions over a common input space, WTE first maps each of them to a probability distribution by label embedding via MDS, then applies WE to obtain one vector per task with respect to a fixed reference measure supported on a reference set of fixed size.