Baxter, Hunter, Huang, Yu, & Leach, Kevin. (2024). Genetic improvement for DNN security. In Proceedings of the 13th International Genetic Improvement Workshop (GI@ICSE 2024), Lisbon, Portugal, April 16, 2024, Pages 11-12. https://doi.org/10.1145/3643692.3648261

This study explores a novel application of Genetic Improvement (GI): hardening Deep Neural Networks (DNNs) against adversarial threats, particularly model extraction attacks. Traditionally, GI has been used to improve DNN architectures and optimize their performance on specialized hardware clusters. With attacks aimed at stealing or replicating machine learning models becoming more prevalent, this work instead proposes using GI to build a defense strategy rather than to improve performance.

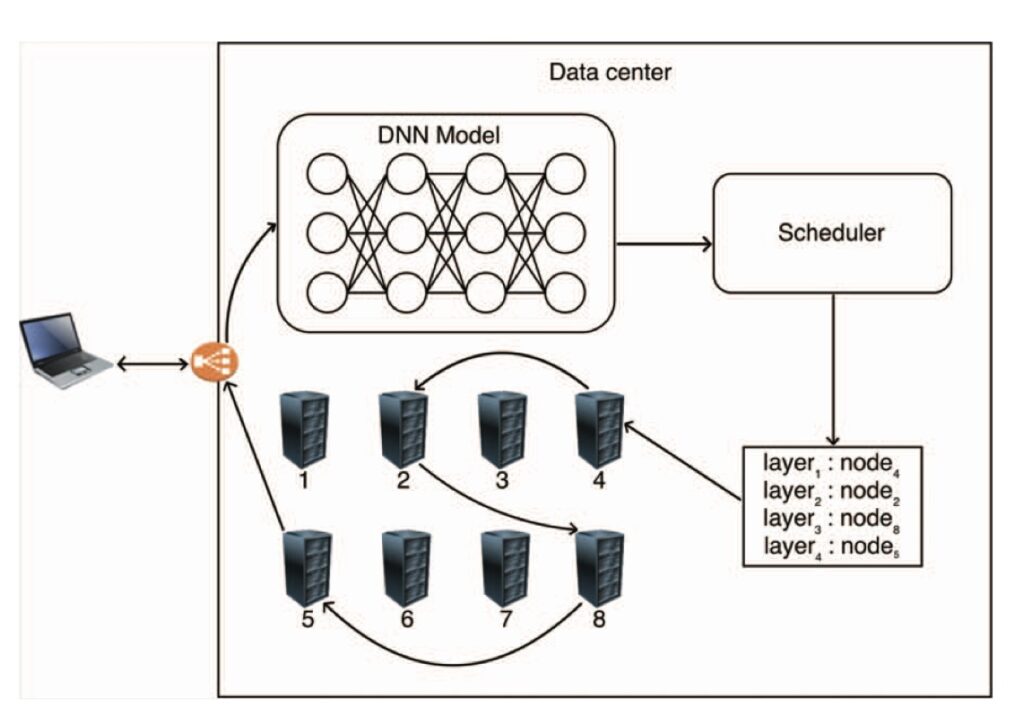

The authors suggest leveraging GI to introduce variability in the scheduling of DNN operations, creating a “moving target defense” that makes it harder for attackers to extract the model’s architecture or parameters. This defense strategy, called probabilistic isolation, diversifies the operator parallelism in the DNN so that the model's execution appears unpredictable from an attacker's perspective, thereby reducing the risk of successful model extraction.
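The idea of varying operator scheduling can be illustrated with a minimal Python sketch. It is not the authors' implementation and does not include a GI search loop; it only shows, under stated assumptions, how independent operators in a DNN dependency graph can be executed in a different but still valid order on each run. The graph `TOY_GRAPH`, the operator names, and the helpers `random_schedule` and `is_valid` are all hypothetical and chosen purely for illustration.

```python
import random
from collections import defaultdict


def random_schedule(deps, rng):
    """Return a randomly chosen valid execution order for the operators.

    deps maps each operator name to the set of operators it depends on.
    Every dependency must itself appear as a key in deps.
    """
    remaining = {op: len(parents) for op, parents in deps.items()}
    children = defaultdict(list)
    for op, parents in deps.items():
        for parent in parents:
            children[parent].append(op)

    # Operators with no unmet dependencies are ready to run.
    ready = sorted(op for op, count in remaining.items() if count == 0)
    order = []
    while ready:
        # Randomly pick among the ready operators; this is where the
        # schedule varies from one run to the next.
        op = ready.pop(rng.randrange(len(ready)))
        order.append(op)
        for child in children[op]:
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)

    if len(order) != len(deps):
        raise ValueError("dependency graph contains a cycle")
    return order


def is_valid(order, deps):
    """Check that every operator runs after all of its dependencies."""
    position = {op: i for i, op in enumerate(order)}
    return all(
        position[parent] < position[op]
        for op, parents in deps.items()
        for parent in parents
    )


# Hypothetical toy network with two independent branches.
TOY_GRAPH = {
    "input": set(),
    "conv_a": {"input"},
    "conv_b": {"input"},
    "pool_a": {"conv_a"},
    "pool_b": {"conv_b"},
    "concat": {"pool_a", "pool_b"},
    "dense": {"concat"},
}

if __name__ == "__main__":
    rng = random.Random()
    for _ in range(3):
        print(random_schedule(TOY_GRAPH, rng))
```

Because the two branches are independent, this toy graph admits six valid orderings, and the order observed can differ between runs while the computed result would stay the same. In the setting the paper describes, GI would presumably be what searches for or generates such schedule variants; that step is not modeled here.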

This approach shifts GI from its typical role in performance optimization to a tool for enhancing security in Machine Learning as a Service (MLaaS) deployments. By introducing variability in how DNN operations are executed, the defense adds a layer of protection, making it a promising technique for safeguarding AI models in adversarial environments.