Can Cui, Ruining Deng, Quan Liu, Tianyuan Yao, Shunxing Bao, Lucas W. Remedios, Bennett A. Landman, Yucheng Tang, and Yuankai Huo. “All-in-SAM: from Weak Annotation to Pixel-wise Nuclei Segmentation with Prompt-based Finetuning.” Journal of Physics: Conference Series, vol. 2722, 2023, The 4th Asia Conference on Computers and Communications (ACCC 2023), 15-17 Dec. 2023,

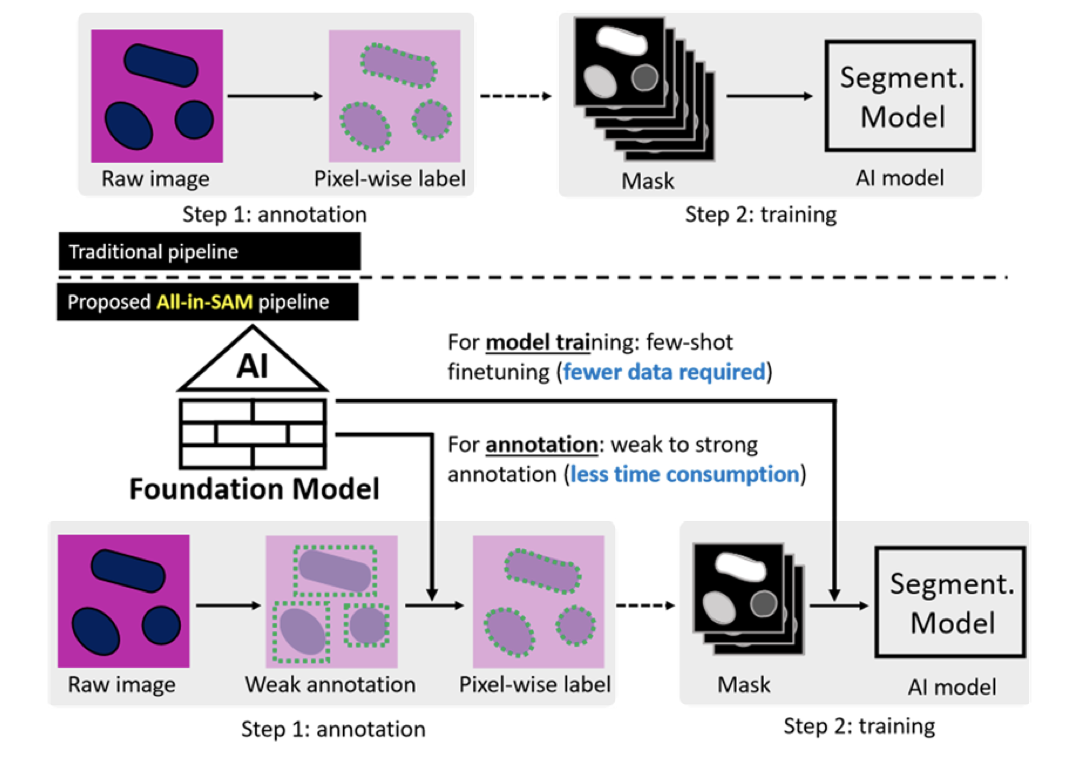

Researchers have developed a new approach that improves how the Segment Anything Model (SAM), a prompt-based model for identifying specific regions in images, is applied to medical images. The original model needs manual prompts to work effectively, which is time-consuming and labor-intensive. The new pipeline, called all-in-SAM, removes the need for manual prompts at inference time. Instead, SAM first turns weak annotations (minimal initial hints) into pixel-wise annotations, which are then used to finetune the model. In the reported experiments, this approach identified cell nuclei in medical images more accurately than other advanced methods. It also worked well when only a limited number of weak annotations were available.
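The two-stage idea in this summary (hints become pixel-level pseudo-labels, and the pseudo-labels then fit a model that needs no hints) can be illustrated with a toy sketch. This is not the authors' code and does not use SAM: region growing from a point stands in for the prompted segmenter, and a single fitted intensity threshold stands in for the finetuned model. The function names, the tolerance value, and the small image are all hypothetical and chosen only for illustration.

```python
from collections import deque


def prompted_segment(image, seed, tol=0.2):
    """Stand-in for a prompt-based segmenter (NOT SAM): grow a region
    from one point hint over 4-connected pixels of similar intensity."""
    h, w = len(image), len(image[0])
    ref = image[seed[0]][seed[1]]
    mask = [[0] * w for _ in range(h)]
    mask[seed[0]][seed[1]] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < h and 0 <= nc < w
            if inside and not mask[nr][nc] and abs(image[nr][nc] - ref) <= tol:
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask


def pseudo_labels(image, point_hints):
    """Stage 1: merge the masks from all point hints into one pixel-level label."""
    h, w = len(image), len(image[0])
    label = [[0] * w for _ in range(h)]
    for seed in point_hints:
        mask = prompted_segment(image, seed)
        for r in range(h):
            for c in range(w):
                label[r][c] |= mask[r][c]
    return label


def fit_threshold(image, label):
    """Stage 2 stand-in for finetuning: fit a prompt-free 'model', here one
    threshold halfway between the mean labeled and mean unlabeled intensity."""
    fg = [v for row, lab in zip(image, label) for v, m in zip(row, lab) if m]
    bg = [v for row, lab in zip(image, label) for v, m in zip(row, lab) if not m]
    return (sum(fg) / len(fg) + sum(bg) / len(bg)) / 2


def predict(image, threshold):
    """Inference without any hints: apply the fitted threshold to every pixel."""
    return [[1 if v > threshold else 0 for v in row] for row in image]


# Toy image with three bright "nuclei"; only two of them receive a point hint.
image = [
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.9, 0.9, 0.1, 0.1, 0.8, 0.8, 0.1],
    [0.1, 0.9, 0.9, 0.1, 0.1, 0.8, 0.8, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.9, 0.9, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.9, 0.9, 0.1, 0.1, 0.1],
]
hints = [(1, 1), (1, 5)]

labels = pseudo_labels(image, hints)        # stage 1: hints -> pixel labels
threshold = fit_threshold(image, labels)    # stage 2: fit on pseudo-labels
prediction = predict(image, threshold)      # inference: no hints required
```

In this toy setting the fitted threshold also marks the third, unhinted nucleus, which mirrors the workflow in miniature: hints are needed only while building training labels, not when the fitted model is applied. It says nothing about how well the real method performs.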